#19 — Learning CS is uncomfortable. And that's ok.

That's why psychological and social factors like motivation become important

Learning CS Still Requires Struggle

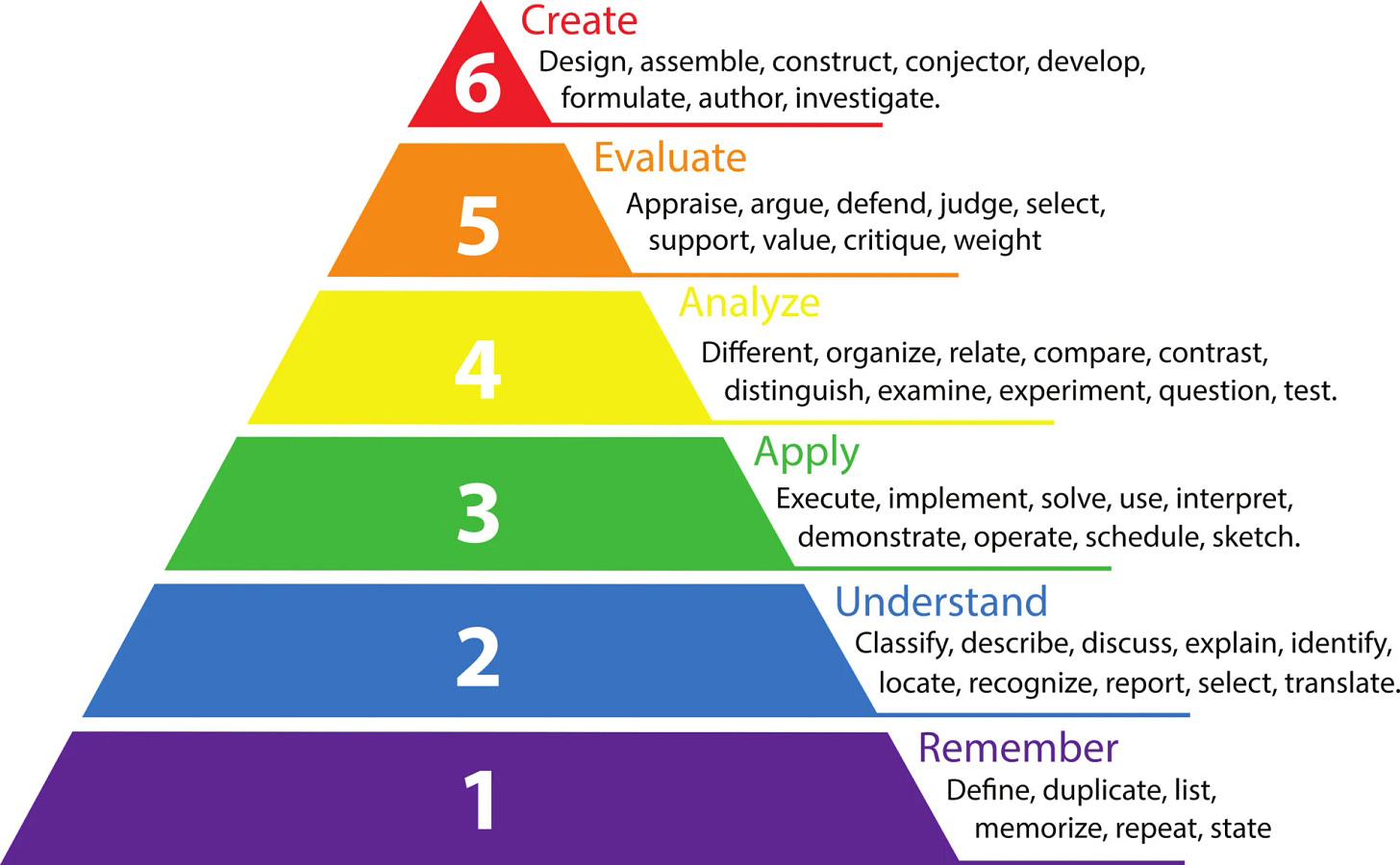

Not everyone is willing to make an effort, and learning computer science is uncomfortable. Being willing to sit with that discomfort is perhaps your greatest strength, because the more different scenarios you see, the better your problem-solving skills will be. That’s why psychological and social factors such as motivation and confidence become important (thanks, Amin, for the idea). There’s this pyramid called Bloom’s Taxonomy that goes from remembering, the first and most elementary step, through understanding, applying, analyzing, and evaluating, up to creating. AI is impacting each of these steps. This reminds me of when people say they learn by watching TikTok videos, but it’s really an “illusion of learning,” and this can happen to us with AI as well. This reaffirms for me that learning requires internalizing knowledge and building a conceptual understanding, which of course requires effort, discomfort, tolerating unease, and working through things that are difficult to understand.

Serious question: What fundamentals won’t you let AI take away from you? Do you want to rent your knowledge or own it? I write everything myself. What I do pass to it is the text so it can show me spelling or grammatical errors, but I choose how to fix them.

The Andrej Karpathy episode – It will take a decade to work through the issues with agents

It’s comforting to hear a more realistic view of agents from such a renowned PhD engineer as Andrej. It’s good to remember that we’re not yet where we’d like to be—it will take hard engineering and research work for the next 10 years.

Andrej said a lot of things (some are outside my field and experience; I’ll set those aside). Here are my notes on the ideas that resonated with me the most, but if you’re interested in digging deeper, here’s the full transcript.

🔍 Resources for Learning CS

→ Real-world AI coding: what works, what doesn’t

Matt Maher created this channel in January 2025 focused on AI-supported software development. He shares his experiences, evaluates different tools and models, and explains what works for him and what doesn’t. It’s useful if you want to improve the results you get from AI, as he explains its limitations and the techniques that can be used to overcome them.

→ How Ghostty’s Creator Uses AI Agents

Very good write-up from Mitchell Hashimoto (Ghostty) of how coding with agents works in practice.

→ Choose your browser

A broad competition has emerged to offer alternative ways to access information. Last week I started looking at Perplexity’s Comet, and yesterday OpenAI’s Atlas appeared. Meanwhile, the Edge browser has Copilot, and Google is integrating Gemini into almost everything. My usual browser before Chrome was Firefox, which has a special tab where it offers to integrate whichever AI model you prefer.

→ Asynchronous Programming

I really enjoyed the latest episode of Programming Throwdown with Patrick Wheeler and Jason Gauci on Asynchronous Programming. Before diving into the topic, they talk about AI scams, news, tools, and books.

Good bites from the industry

→ What Angel Wang learned landing a LinkedIn software engineer job

Here are my notes from this rich conversation between Angel Wang and Aman Manazir:

Is the LinkedIn internship project-based? It’s very team-dependent. Angel’s was a single project, but she knows that some people have individual projects or small tasks.

Not every company uses LeetCode for interviews. Angel got an interview at Khan Academy by applying via a GitHub repo listing internship opportunities. For the first interview, Angel prepared a project and shared the GitHub repository, which was atypical, but luckily, she had a project to show.

People are usually open to helping (they have referral incentives), but you need to build your narrative. Leverage LinkedIn to connect with alumni, but don’t just ask for help; construct a thoughtful message.

On LinkedIn: show genuine interest, be authentic. Don’t use AI; it’s very obvious.

Angel says an algorithms class helps you think of edge cases in coding interviews. Writing the structure, proving why it works, and iterating was a good exercise.

Learn how to use AI to your advantage. For entry-level jobs, learn AI skills to make yourself more competitive.

Content creation as a side project is fine, and you enjoy it more without the pressure. She fears that going full-time into content creation could lead to burnout without the structure.

→ New Vite Documentary from Cult.Repo

Every web dev knows the power of Vite. Cult.Repo launched this Vite documentary on October 9th. It was cool to hear the part of the Vite story Evan You has never spoken about publicly. A solid watch for anyone who cares about modern frontend.

→ Google’s Eng Culture: A Deep Dive

Really enjoyed the deep dive into Google’s eng culture. Great work by Gergely Orosz and Elin Nilsson, tech industry researcher for The Pragmatic Engineer and a former Google intern. Proud subscriber. Keep doing this important work!

Putting the focus where it needs to be

This podcast in Spanish about the mission of education in general sparked a lot of reflection for me this week—it can be applied to schools or universities.

For me, this would be a point to consider very seriously if I were applying for a faculty position today. It has made me think that I would like to work at a university that trains the next generation of STEM leaders not only with technical excellence but with character, humility, and a vision for understanding how their work can serve others. Here are the ideas that resonated most with me:

Awareness that you can change the world from within. That feeling that there’s something different here. When you hire teaching faculty, tell the candidates: either you believe in what you’re doing or you’re not useful to me.

Sometimes we want to turn students into something that will integrate into a system and start functioning. That turns education into something that is simply useful, when education is primarily necessary rather than useful. It is about formation, not information. And, just as happens a bit with literature, its necessity or its utility lies precisely in the fact that it doesn’t seek to be useful. Good literature is a bit like that: it doesn’t seek to be useful, but rather seeks to transmit. That’s it.

A person should not be mediocre because you have talents, man, and you have to respond. And you have to be excellent at what you do. But just as you have to be excellent in math, if you have a good mind, you have to be excellent in love. And you have to be excellent in companionship. And you have to be excellent in sincerity, etc.

That innovation process should be done by putting the focus where it needs to be, because it’s subtle how easy it is to lose focus.

🔍 Resources for Teaching in CS Education

→ Josh Brake on Teaching

Josh Brake’s talk at the MIT Teaching + Learning Lab on cultivating a convivial classroom in the age of AI is already available. Slides are here in case you want to take a look, and the related blog post he wrote the day before the talk is here.

→ GRAILE AI Talks Now on YouTube

If you weren’t able to make it to GRAILE AI, then I have good news for you: all talk recordings are now available on YouTube!

→ 📝 Resource shared by Kristin Stephens-Martinez

Let’s think more carefully about academic meetings. This episode has so many good ideas. If you want to improve your meetings, pick one thing to try and don’t overwhelm yourself. Experiment and observe how things shift before making the next change.

🌎 Computing Education Community Highlights

The CS department at Ursinus College is seeking a one-year visiting faculty member. Meanwhile, the University of Utah is hiring an Assistant Professor (Lecturer) in Computing.

Juho’s group at Aalto has opened a PhD position in Educational Tools for Teaching Generative AI Use to Novice Programmers. You can apply here.

Upcoming events for teaching Computer Science, hosted by UK SIGCSE:

Monday 3rd November at 2pm UTC on Zoom: How Consistent Are Humans When Grading Programming Assignments? with Marcus Messer from King’s College London

Monday 1st December at 2pm UTC on Zoom: The Role of Generative AI in software student collaboration with Juho Leinonen from Aalto University, Finland

Thursday 8th January 2026 in person in Durham: Annual ACM Computing Education Practice (CEP) conference at Durham University

Monday 2nd February 2026: Semantic Waves with Jane Waite and Paul Curzon from Queen Mary, University of London and the Raspberry Pi Computing Education Research Centre in Cambridge, UK

Survey on technology adoption and learning preferences in Computing Science Higher Education.

The Thirtieth Annual CCSC Northeastern Conference is calling for participation. You can also help this group that studies the effect of native language on learning to program.

Join this interactive webinar on Oct. 28 on enhancing your introductory programming courses (postsecondary and high school) with subgoal labeling. Drs. Briana Morrison (University of Virginia) and Adrienne Decker (University at Buffalo) will show us how to use worked examples and practice problems to help students grasp key programming concepts more effectively. Don’t miss this opportunity to bring proven strategies directly into your classroom! Register at https://bit.ly/subgoal_labels. You’ll get hands-on guidance with examples you can adapt for your own courses and discover a free e-book packed with worked examples, practice problems, and assessments for both Python and Java (available at https://www.cs1subgoals.org/).

🤔 So many thoughts to ponder today...

People are jumping on the Substack wave.

Love Ethan Mollick’s latest “An Opinionated Guide to Using AI Right Now”.

Good analogy here:

It helps to remember what happened with compilers in the 1950s to understand AI-assisted programming today.

The transformative impact of the compiler on software development is hard to overstate. Now, you could write programs in a language like C with constructs like conditionals (if, else), loops (for, while), and functions to more naturally communicate what your code should do without needing to spell out every detail of the assembly code it would be converted into. Not only could you express your ideas more easily and in a format that was much more human-readable, but you could work faster because the code to perform an operation in C was much more compact than the number of assembly instructions it corresponded to. One line in C might map to ten lines of assembly.
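To make the "one line maps to many instructions" idea concrete, here is a minimal sketch. It is my own illustration, not Josh's: it swaps in Python and its bytecode for C and assembly, using the standard-library `dis` module, and the `total` function is a hypothetical example. The exact instruction count depends on the Python version, so the code only prints whatever it finds.

```python
import dis


def total(values):
    """Sum a list of numbers with an explicit loop (four lines of logic)."""
    result = 0
    for v in values:
        result += v
    return result


# Collect the low-level bytecode instructions the interpreter actually runs.
instructions = list(dis.get_instructions(total))

print(f"total([1, 2, 3]) = {total([1, 2, 3])}")
print(f"4 source lines expand to {len(instructions)} bytecode instructions:")
for ins in instructions:
    # opname is the human-readable name of each low-level operation.
    print("   ", ins.opname)
```

Running it shows a handful of readable lines expanding into a noticeably longer list of low-level steps, the same compression the quote describes for C and assembly.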

I agree with Josh: anyone who isn’t a software engineer may think that learning to code doesn’t make sense anymore, but it will still be necessary to understand why, and there is significant value in understanding what you want to generate in order to interact more effectively with the tool.

Another interesting point is that continuous learning is more necessary than ever. Josh mentions two ideas that I find interesting: ride the wave (try to stay up to date, understand the fundamentals of AI), and skate to where the puck is headed (keep your eyes on the horizon; when we pay attention to the smallest details or go too deep into AI, we lose sight of the big picture and can even burn out).

Balaji’s idea that AI really means amplified intelligence makes a lot of sense after reading Josh:

Even if you think that AI is going to drastically reshape the way that code is written today, it’s still going to run on the same processors. AI’s amplification of your ability to create software means that there is even more value in learning the fundamentals. If you live by the vibe, you’ll die by the vibe. The real unlock is to use it to continue to push yourself and to deepen and expand your rare and valuable skills. If you focus on career capital, you’ll be in good shape. Developing expertise requires concerted effort, the same as ever.

These students want no part of AI in their core curriculum, and Paul Blaschko believes they are right. Loved his approach on rethinking assignments and assessment itself:

The Observer editors are right: AI forces us to confront the purpose of our courses. Alternative assessments — oral exams, in-class writing, project portfolios — might help, but they aren’t the heart of the solution. The heart is rethinking assignments and assessment itself: designing courses that value reasoning, collaboration, and formation over mere output.

At the same time, it’s interesting what Stanford says about universities being crucial for learning AI.

Marc Watkins (Rhetorica) on the shameless wink-wink marketing campaign from Perplexity Comet:

We badly need to move beyond talk of AI slop, model collapse, or general criticisms that AI is bad at performing all tasks. Instead, we should start being honest when these tools work and are effective, while honing meaningful criticism of when they are not and cause negative and often lasting impacts on our culture. Selling AI to students as a means to avoid learning must be one of those, and companies that engage in it should be put on notice that this practice isn’t acceptable and has consequences.

This post by Kirupa on adapting AI-generated code got me thinking:

As we rely on AI assistants to write more and more of our code, they will generate solutions that are optimized for them as opposed to being optimized for us humans. To put it differently, if we put the AI in the driver’s seat, it may take routes to get to the destination that might be different from ones we’d take. The solution sometimes isn’t to fight the AI assistant. The solution is to better understand what the AI assistant is doing so that we can continue collaborating effectively with it.

Here’s another great way to provide context in AI-driven coding. This is an article worth reading carefully. On a related note, I found this article on why AI coding still fails in enterprise teams interesting. You’ve heard bold claims of AI writing 30-50% of code at some companies. But large, complex, and long-lived codebases – with strict quality, security, and maintainability requirements – demand an entirely different approach to AI adoption.

And if you’re still wondering what the best programming language is in this age of AI, Jason has the answer, and I agree 100%.

📌 The PhD Student Is In

→ Reviewing Papers for TS 2026

Last Sunday I dedicated half the day to reviewing 5 posters assigned for TS 2026, and just when I thought I was done, I learned that I’ll have to review 3 more papers this weekend. That’s okay; I find it interesting to think about other topics in my field. It’s always difficult to evaluate colleagues’ work, but I tried to be as fair as possible. It promises to be a great conference. I hope I can go as a volunteer, and I’ll send in the application soon.

→ Letting Go of Research Ideas

In my short life as a researcher, one of the main things I am learning is to embrace rapid prototyping and to abandon ideas that seemed promising when both the advisor and the project need you to go in a different direction. You have to accept it, even though it can be difficult at times because of the hours you have put into it.

→ Presenting Work Outside My Area

This week I had to present this paper accepted at SoCC ’24. Great collaborative work with my colleague Mohammad Sameer! Even though this isn’t my research area, it helped me learn a bit more about AIOps agents. Thanks to Manish for providing me with the presentation and code repository. I’ll be keeping a close eye on him.

🪁 Leisure Line

Since I arrived in Texas, I’ve been going to College Station every other Friday to train and mentor college students, mostly from Texas A&M! It’s a very welcoming place. The room was especially Texan last week. Could it have something to do with Aggie pride over being 7-0 and 3rd in the national college football rankings?

This week I faced the reality of homelessness in downtown Houston. Letting people speak is the most important thing: it reminds them, and us, of their inherent value. We try to encourage those who are suffering and to give them hope.

Afterward, I tried Chipotle for the first time. Highly recommend the burritos.

📖📺🍿 Currently Reading, Watching, Listening

This Coldplay song reminded me that in life we will face difficulties and obstacles, but enduring that suffering isn’t enough. Rather, the idea I like is what I take from these lyrics: we need to have something worth fighting for. That brings a lot of peace. That reason we get up every morning. Will it be just as difficult if we have that purpose? Yes, of course, but we’ll endure it better because we have a good purpose that’s worthwhile. I think this is a challenge for CS educators: getting students to have a clear direction to strive toward despite all the obstacles.

This week I’ve been watching the series “Halt and Catch Fire.” It’s been around for a while now, but the dramatic stories of these entrepreneurs involved in technology development, from the first PCs to the World Wide Web, could easily happen today. Great actors and gripping episodes. And some of the stories, especially from the first season, are inspired by real events. The first two seasons are available on the Philo platform.

6 years later and I still can’t believe how incredible this cover is:

I’m excited to be reading To Light a Fire on the Earth by Bishop Barron. Given what my life has been like over the last months, my reading time has taken a significant hit, but what I’ve heard and read so far about this book has made me excited to dig in.

Quotable 💬

Many of the best changes you can make in life are on the other side of some cost you’re painfully familiar with, but where the payoff is a kind of joy you can’t yet comprehend.

That's all for this week. Thank you for your time. I value your feedback, as well as your suggestions for future editions. I look forward to hearing from you in the comments.

Quick Links 🔗

🎧 Listen to Computing Education Things Podcast

📖 Read my article on Vibe Coding Among CS Students

💌 Let's talk: I'm on LinkedIn or Instagram

Spot on. Thank you for this insightful piece on the discomfort inherent in truly owning knowledge, which is vital when navigating the AI landscape.